Overview

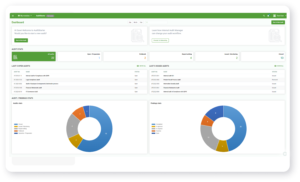

A data pipeline collects data from numerous sources and moves it to a centralized location for processing, but pipelines are not bulletproof. Robust data pipeline monitoring is essential for maintaining data quality and reliability, identifying network errors, and responding to issues quickly. The first step is to identify which metrics to monitor, such as data volume, latency, or data quality metrics. Next, use data pipeline monitoring tools to collect, visualize, and analyze those metrics. A monitoring tool allows engineers to identify and resolve issues as soon as they appear. Our data pipeline monitoring solution provides real-time visibility, customizable and automated alerts, and predictive analytics for seamless data flow.
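To make the three metric families concrete, here is a minimal sketch of computing volume, latency, and one data quality metric for a batch of records, then checking them against alert thresholds. It assumes each record carries the time it was produced upstream and the time it landed in the central store, and it uses the share of missing `customer_id` values as the quality metric. The names `Record`, `Thresholds`, `compute_metrics`, and `check_thresholds`, and the threshold values, are hypothetical illustrations, not the API of any particular monitoring tool or of the solution described above.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Record:
    # Hypothetical record shape: upstream production time, load time,
    # and one field whose completeness we track as a quality metric.
    produced_at: datetime
    loaded_at: datetime
    customer_id: Optional[str]


@dataclass
class Thresholds:
    # Illustrative limits only; real values depend on the pipeline's SLAs.
    min_volume: int = 1
    max_p95_latency: timedelta = timedelta(minutes=5)
    max_null_rate: float = 0.01


def compute_metrics(batch: List[Record]) -> dict:
    """Compute volume, p95 end-to-end latency, and null rate for one batch."""
    volume = len(batch)
    if volume == 0:
        return {"volume": 0, "p95_latency": None, "null_rate": None}
    latencies = sorted(r.loaded_at - r.produced_at for r in batch)
    # Nearest-rank 95th percentile of the sorted latencies.
    p95 = latencies[max(0, math.ceil(0.95 * volume) - 1)]
    nulls = sum(1 for r in batch if r.customer_id is None)
    return {"volume": volume, "p95_latency": p95, "null_rate": nulls / volume}


def check_thresholds(metrics: dict, t: Thresholds) -> List[str]:
    """Return a human-readable alert for every metric outside its limit."""
    alerts = []
    if metrics["volume"] < t.min_volume:
        alerts.append(f"volume {metrics['volume']} below {t.min_volume}")
    p95 = metrics["p95_latency"]
    if p95 is not None and p95 > t.max_p95_latency:
        alerts.append(f"p95 latency {p95} exceeds {t.max_p95_latency}")
    null_rate = metrics["null_rate"]
    if null_rate is not None and null_rate > t.max_null_rate:
        alerts.append(f"null rate {null_rate:.2%} exceeds {t.max_null_rate:.2%}")
    return alerts


# Usage: two healthy records and one that is late and missing its customer_id.
base = datetime(2024, 1, 1, 12, 0)
batch = [
    Record(base, base + timedelta(minutes=1), "c1"),
    Record(base, base + timedelta(minutes=1), "c2"),
    Record(base, base + timedelta(minutes=10), None),
]
metrics = compute_metrics(batch)
alerts = check_thresholds(metrics, Thresholds())
for alert in alerts:
    print("ALERT:", alert)
```

In a real deployment these values would typically be exported to a monitoring tool for visualization and alerting rather than printed; the plain function here only shows what each metric measures and how a threshold turns it into an alert.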