- 1. My Study on AI-Assisted Development

- 2. We Want to Use GenAI but We’re Mostly Using It on Our Own

- 3. The Illusion of Competence Is Real and Worth Watching

- 4. From Passive Permission to Strategic Adoption: How Organizations Respond to AI in Software Development Lifecycle

- 5. How Organizations and Teams Should Adapt to the Rise of AI in Software Development

- 6. FAQ

The rapid advancement and adoption of GenAI are reshaping how software is created. From code completion and documentation generation to decision support, such as exploring architectural options and evaluating their trade-offs, GenAI is becoming a daily companion for many of us. But how willing are we to use it in our everyday tasks, and how ready are the organizations we work in to support us in this?

To explore this question, I conducted a study investigating how software professionals adopt AI tools to support their day-to-day work. The results can also serve as a benchmark for companies that are currently modernizing their software development processes with AI.

My Study on AI-Assisted Development

The study followed a mixed-method research design, combining qualitative and quantitative approaches to gain a comprehensive understanding of how generative AI is being adopted across the software development lifecycle.

The qualitative phase consisted of semi-structured interviews with professionals from diverse roles, including developers, tech leads and managers. The participants represented a range of organizational contexts and levels of experience with GenAI tools. The interviews focused on use-cases, benefits and limitations, and the broader impact of GenAI on the future of IT.

The insights from the interviews were then used to design the quantitative survey, which gathered responses from 100 professionals who work with code on a daily basis. The survey included people from different countries and aimed to check how widely the opinions gathered in the interviews were shared across a larger sample.

We Want to Use GenAI but We’re Mostly Using It on Our Own

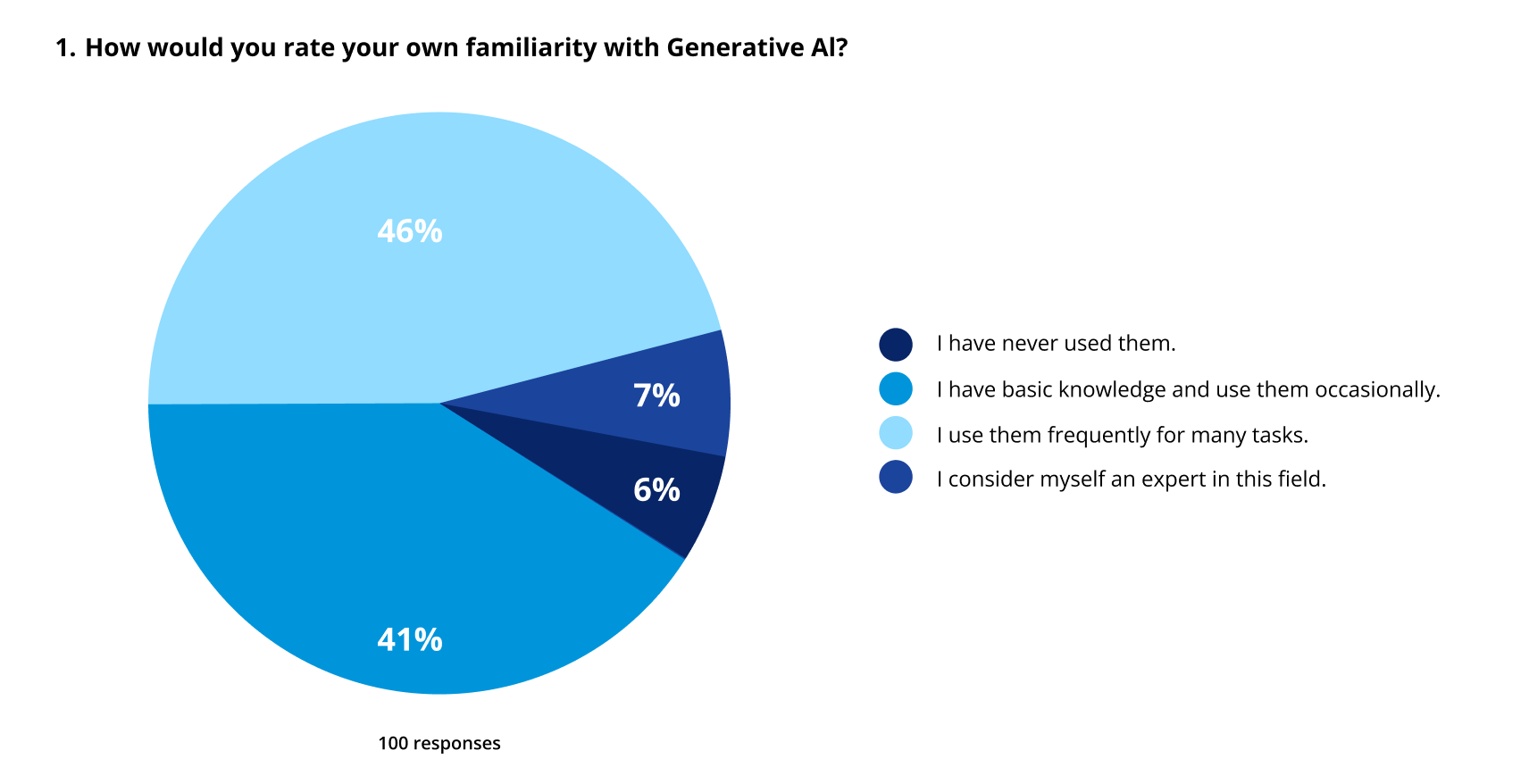

Generative AI is no longer a novelty in software development. In fact, as shown in Fig. 1, 94% of the survey respondents declared that they use GenAI tools for their work tasks at least occasionally, and half of them use these tools frequently and for many tasks. A small group of GenAI experts made up 7% of the survey sample. Participants of the in-depth interviews also represented a diverse range of familiarity with and attitudes toward GenAI: from cautious and skeptical users applying it only in simple, repetitive tasks, to experienced professionals who actively explored its broader potential.

Figure 1. Respondents’ GenAI familiarity level

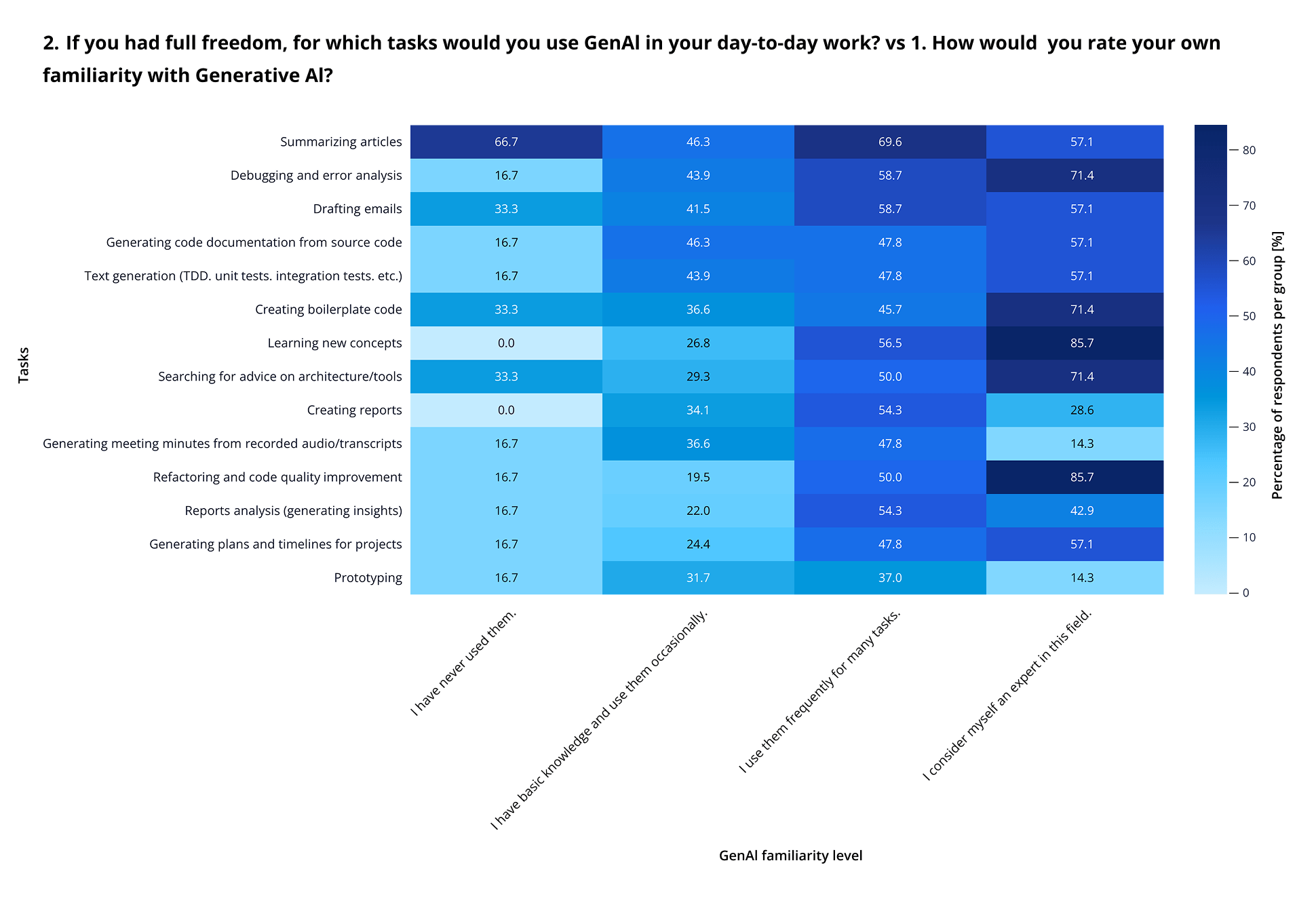

Both the interviews and the survey showed that users prefer using AI primarily for repetitive and low-complexity tasks: boilerplate code generation, writing unit tests, creating documentation, or summarizing content. In contrast, creative and more complex tasks, such as researching new concepts, architectures, and tools, or refactoring code, are less popular, especially among less experienced users. Interestingly, these are the most common use cases for respondents with advanced knowledge of GenAI. For example, code refactoring was one of the top use cases among experts and one of the least popular among beginners. What’s more, the expert group gave very consistent answers in the survey, which may indicate that the use cases they point to deserve particular attention. The heat map in Fig. 2 illustrates the relationship between GenAI familiarity and preferred use cases.

Figure 2. GenAI familiarity vs preferred use cases

These patterns of usage are also reflected in user perceptions. Nearly 70% of the respondents see AI as a “game changer” that could become a standard part of their workflow. There’s a clear correlation: the more familiar users are with these tools, the more confident they feel about that statement. What’s more, 53% of respondents declared they would feel comfortable having large parts of their code or documentation generated by AI, as long as the responsibility for the final outcome lies with them. Almost 80% agreed that using AI for repetitive tasks would significantly boost their productivity, highlighting just how strongly individual users perceive its value, especially for low-complexity tasks.

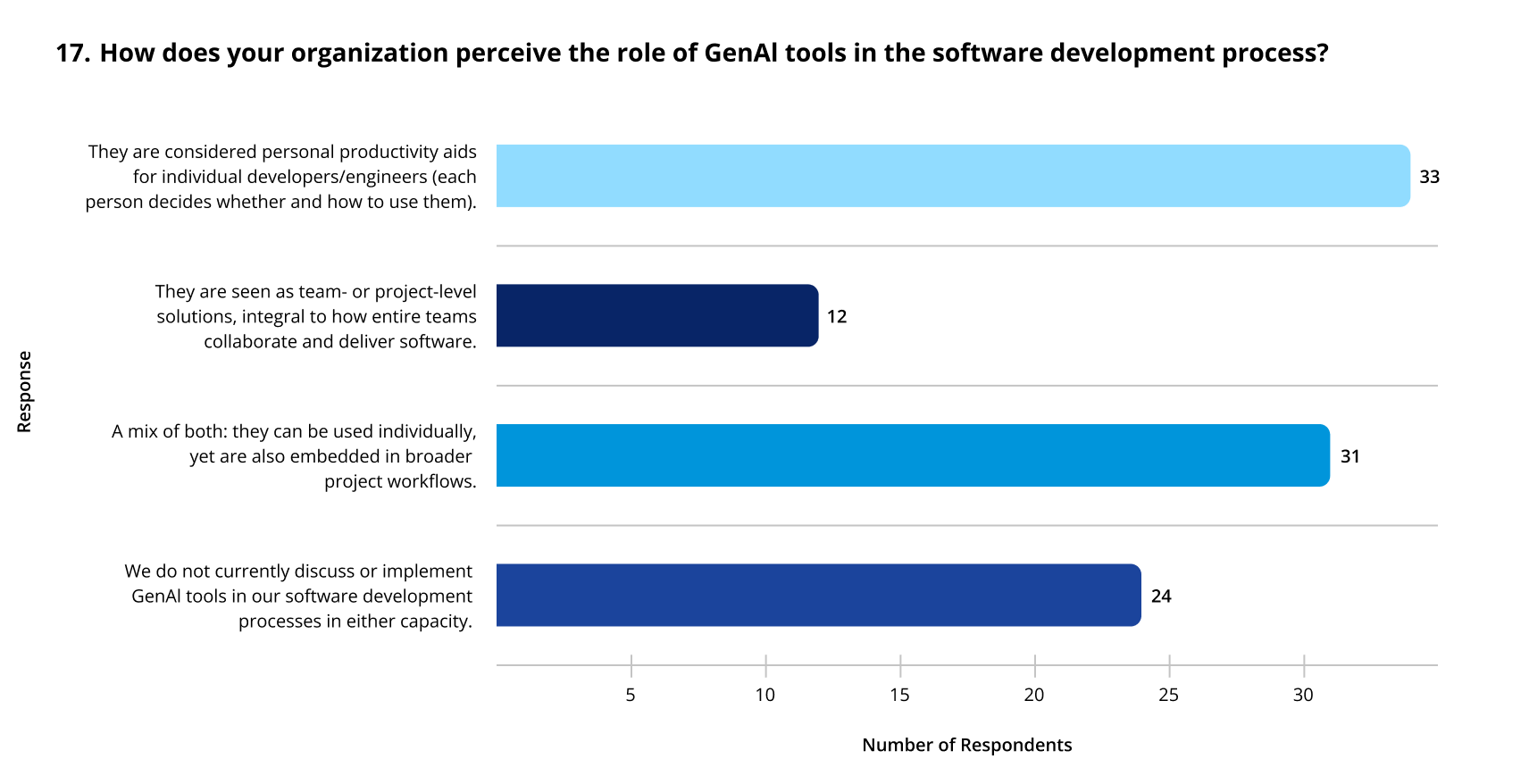

The findings also suggest that while GenAI is widely used, it is still rarely adopted as a fully coordinated team practice. According to the survey, in 33% of cases GenAI is used only on an individual basis, while 31% of organizations combine individual use with occasional team collaboration. Only 12% reported having shared team guidelines or structured processes, and in the remaining 24% these tools are not implemented in either capacity (see Fig. 3). In most workplaces, AI remains a personal tool rather than a collective one. However, some interview participants pointed out that introducing more team-level AI practices could support knowledge sharing and help foster a culture focused on high code quality.

Figure 3. Organizations’ perception of the role of GenAI tools in the software development process

The Illusion of Competence Is Real and Worth Watching

One of the recurring themes in the interviews was the so-called illusion of competence: a situation where overreliance on AI, without an effort to understand the generated output, leads users to later overestimate their skills. Most interview participants agreed that excessive dependence on GenAI can pose a risk, especially for less experienced developers, and all of them emphasized the importance of verifying AI-generated results and maintaining critical thinking.

This issue was also addressed in the survey: when asked whether AI tools might create a false sense of competence, most respondents expressed concern, but the answers differed depending on the user’s experience in the GenAI field. All experts agreed that this risk is real, while users with less knowledge who used AI often and for many tasks were more likely to downplay it. This group had the highest share of answers indicating no concern about a false sense of competence. This raises the question: are some users already experiencing this illusion without realizing it? These results highlight the need for more education and awareness around verification of AI-generated content.

External research also reinforces this concern. A study on GitHub Copilot found that while AI-generated code was comparable in quality to human-written code, developers paid significantly less attention to it during visual inspection. Using eye-tracking, researchers observed that developers looked at Copilot’s suggestions less often and for shorter periods, suggesting a tendency to trust the tool too much (a phenomenon known as automation bias). This kind of overreliance can increase the risk of overlooking errors and contribute to long-term issues such as technical debt.

From Passive Permission to Strategic Adoption: How Organizations Respond to AI in Software Development Lifecycle

While individuals are eager to use AI at work, organizational readiness to embrace these tools varies widely. The survey looked into how GenAI is used in, and how it supports, software development lifecycle processes across companies, and the results showed a mixed picture.

Large companies, as expected, tend to have more restrictions, but they also establish clearer standards for AI use. Their greater resources allow them to introduce comprehensive regulations and AI frameworks. Overall, most organizations seem to be positively inclined toward generative technologies, but a passive approach is still predominant. Companies allow employees to use AI tools, yet in most cases offer little active support or direction (regulated industries are an exception).

Only some large organizations take a proactive stance: one that goes beyond enabling individual productivity, toward team- or project-level integration and investment in training on how to develop software using AI tools. Still, such investment remains rare: only 11% of organizations provide GenAI training for their developers. Overall, large companies are either more restrictive or more deliberate, while smaller organizations often fall somewhere in between, with a wait-and-see attitude.

The research confirms that professionals are ready to use AI tools in their work, and it’s now up to organizations to move from passive permission to strategic AI adoption. This includes establishing standards, planning education, and integrating AI with existing tools and processes. The impact of generative technologies on today’s job market is undeniable and their role will only grow in the future.

How Organizations and Teams Should Adapt to the Rise of AI in Software Development

There is no one-size-fits-all recommendation when it comes to using GenAI in software development. The impact and implementation strategies depend heavily on the organization’s size, maturity, workflows, and readiness for change. While individual use is a good starting point, meaningful benefits emerge when teams adopt GenAI intentionally and with shared standards. We must also keep in mind how quickly both the tools (e.g. GitHub Copilot) and the underlying AI models (e.g. Anthropic Claude) are evolving. In 2025 alone, we witnessed several AI model updates, each one improving results on code generation benchmarks. It is therefore necessary to follow the development of these tools closely and to adapt them to the internal needs of the organization.

In the follow-up to this article, we will present a high-level framework to help organizations evaluate where and how to integrate generative technologies in a way that matches their goals, resources, and development culture.

FAQ

1. How widely is GenAI used among software professionals?

According to the survey, 94% of respondents use GenAI tools for their work tasks at least occasionally, most commonly for repetitive or low-complexity tasks such as documentation or boilerplate code generation.

2. What are the biggest risks of relying on GenAI in software development?

The main risk identified is the “illusion of competence”: overtrusting AI-generated output without fully understanding it, especially among less experienced users. This can lead to errors and long-term technical debt.

3. How can teams mitigate the risk of “illusion of competence” when using GenAI?

Teams can adopt lightweight peer-review rituals focused specifically on AI-generated code. For example, developers can mark which parts of a pull request were AI-assisted, prompting reviewers to pay extra attention. Some companies also introduce “AI hygiene checklists” that include verifying assumptions, validating dependencies, and re-running tests with edge cases. These safeguards help maintain code quality without slowing down productivity.
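One way the marking practice described above might be automated is sketched below. It assumes a hypothetical convention in which developers add an `AI-Assisted: yes` trailer to commit messages. The trailer name and both helper functions are illustrative assumptions, not an established standard or a practice reported in the study.

```python
from __future__ import annotations

import re

# Hypothetical convention: a commit-message trailer such as "AI-Assisted: yes".
# The trailer name is an assumption made for illustration only.
AI_TRAILER = re.compile(
    r"^AI-Assisted:\s*(yes|true)\s*$", re.IGNORECASE | re.MULTILINE
)


def is_ai_assisted(commit_message: str) -> bool:
    """Return True if the message has the hypothetical trailer on its own line."""
    return AI_TRAILER.search(commit_message) is not None


def commits_needing_extra_review(commit_messages: list[str]) -> list[str]:
    """Return the subject lines of commits flagged as AI-assisted."""
    flagged = []
    for message in commit_messages:
        if is_ai_assisted(message):
            # The subject is the first line of the commit message.
            flagged.append(message.strip().splitlines()[0])
    return flagged


if __name__ == "__main__":
    messages = [
        "Add retry logic to HTTP client\n\nAI-Assisted: yes",
        "Fix typo in README",
    ]
    for subject in commits_needing_extra_review(messages):
        print(f"Extra review suggested: {subject}")
```

A check like this only surfaces what developers choose to declare, so it supports the review ritual rather than replacing it.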

4. What skills will become more important as GenAI becomes standard in software development?

Beyond coding, developers will increasingly need skills in prompt design, architectural reasoning, and critical evaluation of model output. The ability to break down problems clearly and translate them into precise prompts will become as important as knowing specific frameworks. Equally crucial are soft skills such as collaboration and documentation, since teams need shared practices for working with AI responsibly.

5. How can smaller companies start building GenAI-ready development processes?

They don’t need enterprise-scale frameworks. A practical starting point is creating a shared “AI playbook” with simple rules: which tools are approved, what types of tasks should be AI-assisted, when human verification is required, and how to handle sensitive data. This can later evolve into training sessions, code templates, or automated linting rules that incorporate AI best practices.
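The four playbook rules listed above could be captured in a small data structure so that they are easy to share and check. The following is a minimal sketch under stated assumptions: the `AIPlaybook` class, its field names, and the example values are all hypothetical and are not taken from the study.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIPlaybook:
    """Hypothetical team playbook; all field names and values are illustrative."""

    approved_tools: set[str] = field(default_factory=set)
    ai_assisted_tasks: set[str] = field(default_factory=set)
    tasks_requiring_human_review: set[str] = field(default_factory=set)
    sensitive_data_allowed: bool = False

    def check(self, tool: str, task: str, uses_sensitive_data: bool) -> list[str]:
        """Return playbook violations and reminders for a planned use of AI."""
        notes = []
        if tool not in self.approved_tools:
            notes.append(f"Tool '{tool}' is not on the approved list.")
        if task not in self.ai_assisted_tasks:
            notes.append(f"Task '{task}' is not listed as suitable for AI assistance.")
        if uses_sensitive_data and not self.sensitive_data_allowed:
            notes.append("Sensitive data must not be sent to AI tools.")
        if task in self.tasks_requiring_human_review:
            notes.append(f"Task '{task}' requires human verification before merge.")
        return notes


if __name__ == "__main__":
    playbook = AIPlaybook(
        approved_tools={"GitHub Copilot"},
        ai_assisted_tasks={"unit tests", "documentation", "boilerplate"},
        tasks_requiring_human_review={"unit tests"},
    )
    for note in playbook.check("GitHub Copilot", "unit tests", uses_sensitive_data=False):
        print(note)
```

Keeping the rules in one small structure means the same playbook can later feed training material or automated checks without being rewritten.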

6. What changes can we expect in the software development lifecycle as GenAI tools improve?

As AI-driven tools become more capable, we’ll likely see a shift toward more exploratory and iterative development. Tasks like initial code scaffolding, test generation, and basic documentation may become fully automated. Human effort will move toward higher-level design, validation, and decision-making. Over time, AI could act as a “junior architect,” helping evaluate trade-offs or simulate system behavior before coding even begins.
