- 1. Imperative of Careful Implementation

- 2. Risks Associated With AI Implementation

- 3. Mitigation Strategies for Business and Project Leaders: Best Practices for Safe AI Deployments

- 4. AI Security Frameworks: Industry Best Practices

- 5. Real-World Examples of Business Consequences from Unsafe AI

- 6. Navigating AI Regulations: Security, Standards, and Responsibility

- 7. Summary

You don’t need to build autonomous robots to face serious AI risks. For most businesses, the biggest threats come from something far more familiar: deploying AI tools into everyday operations without the right guardrails.

As generative AI and intelligent agents become part of standard workflows in areas like customer support, document processing, analytics, or IT automation, organizations are discovering that impressive results can come with hidden vulnerabilities. From small inconsistencies in generated text (e.g. customer complain answer not fully aligned with organization’s politics) to unmonitored decision-making (which is becoming important matter is realms of EU AI Act). The gap between technical capability and responsible use is widening.

According to McKinsey’s 2025 report on the state of AI, companies are beginning to respond: active risk management around AI grew noticeably in late 2024, especially in areas like cybersecurity, regulatory compliance, and reputational risk. Still, oversight of AI-generated outputs remains inconsistent: while 27% of organizations review all generative AI outputs before use, 30% report that a vast majority of such content goes unchecked. This is just one aspect of security risks associated with AI that calls for organization-wide governance to prevent costly missteps.

AI safety has two faces: the first one is alignment and ethics, the second is data safety and solution security. In this article, we want to explore the practical security and safety challenges of bringing AI into real-world business environments. Ethical AI and hallucinations is an important and widely discussed topic in AI space, but because it is also related with cultural values of where certain solution is deployed – we will leave this topic for separated article in the future.

Based on C&F’s field experience and the latest industry standards, we’ll walk through the most pressing risks and offer actionable strategies to help you mitigate them. Our goal is to support AI implementers, tech leads, and business professionals who are responsible for turning AI from proof-of-concept into production.

Imperative of Careful Implementation

It’s never been easier to start using AI, but that’s exactly what makes it risky. While experimentation is essential, deploying AI without a structured and cautious approach can introduce serious security and safety vulnerabilities. Just like any software system, AI must be implemented thoughtfully, with attention to architecture, access, and accountability.

One of the most pressing issues in 2025 is the rise of shadow AI—unaudited or unofficial models integrated outside of centralized IT governance. These third-party or employee-adopted models often lack proper vetting and may contain hidden security flaws, generate unreliable or falsified outputs, or even enable jailbreaking or prompt injection attacks by design.

To mitigate these risks, organizations should lean on trusted providers and follow guidance from established security frameworks. Recommended standards include:

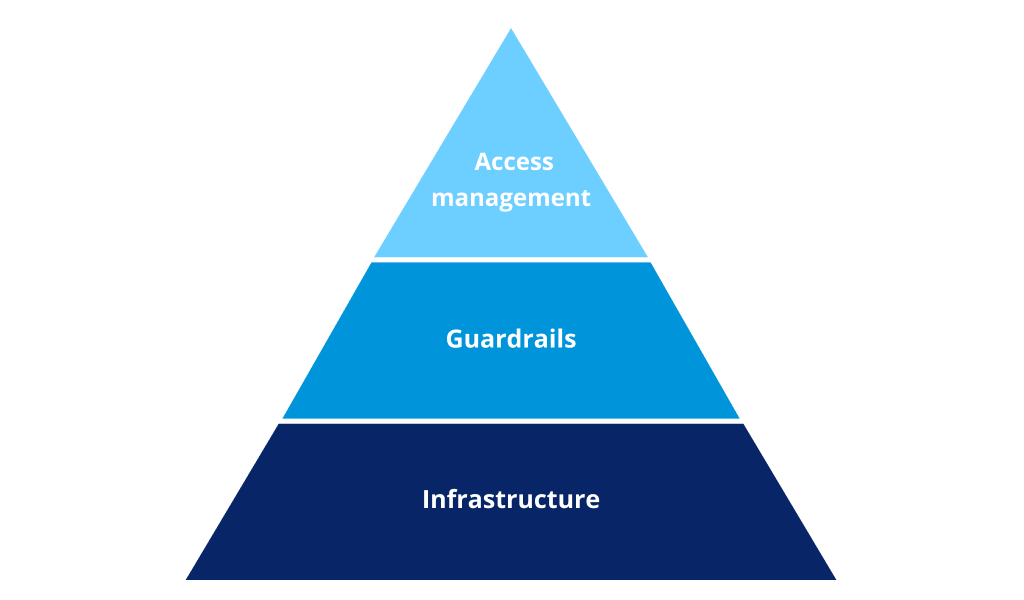

We can think of AI security in three main layers:

- access management for end users (with proper role management for different types of data, especially through conversational interfaces),

- guardrails: solution specific security rules applied to user inputs as well as AI model outputs,

- Infrastructure, understood as enterprise level security.

Equally important is understanding what data AI models (especially Large Language Models hosted in cloud environments) can access. If an AI system is integrated with internal documents, databases, or communication platforms, it may surface or infer private information that users can then extract—deliberately or inadvertently. Proper data access controls and visibility are essential before deployment.

Access control also plays a pivotal role. Organizations must define who can interact with AI systems and under what conditions. Role-based access, consistent permission frameworks, and strong authentication policies help ensure that AI does not become a point of privilege escalation or abuse.

Moreover, monitoring and observability are non-negotiable. As with any complex software system, not all vulnerabilities will be known at the time of deployment. Logging, auditing, anomaly detection, and feedback loops are essential for identifying and responding to unexpected behaviors or threats over time.

Finally, AI is not an exception in the broader security ecosystem. All traditional infrastructure protections: network security, endpoint protection, data encryption, firewalling, patching—remain critical. You should treat AI as just another entry point for malicious actors. Don’t stop at purely technical measures. Educating your teams to become responsible AI users has to be a part of the journey towards safe AI.

Risks Associated With AI Implementation

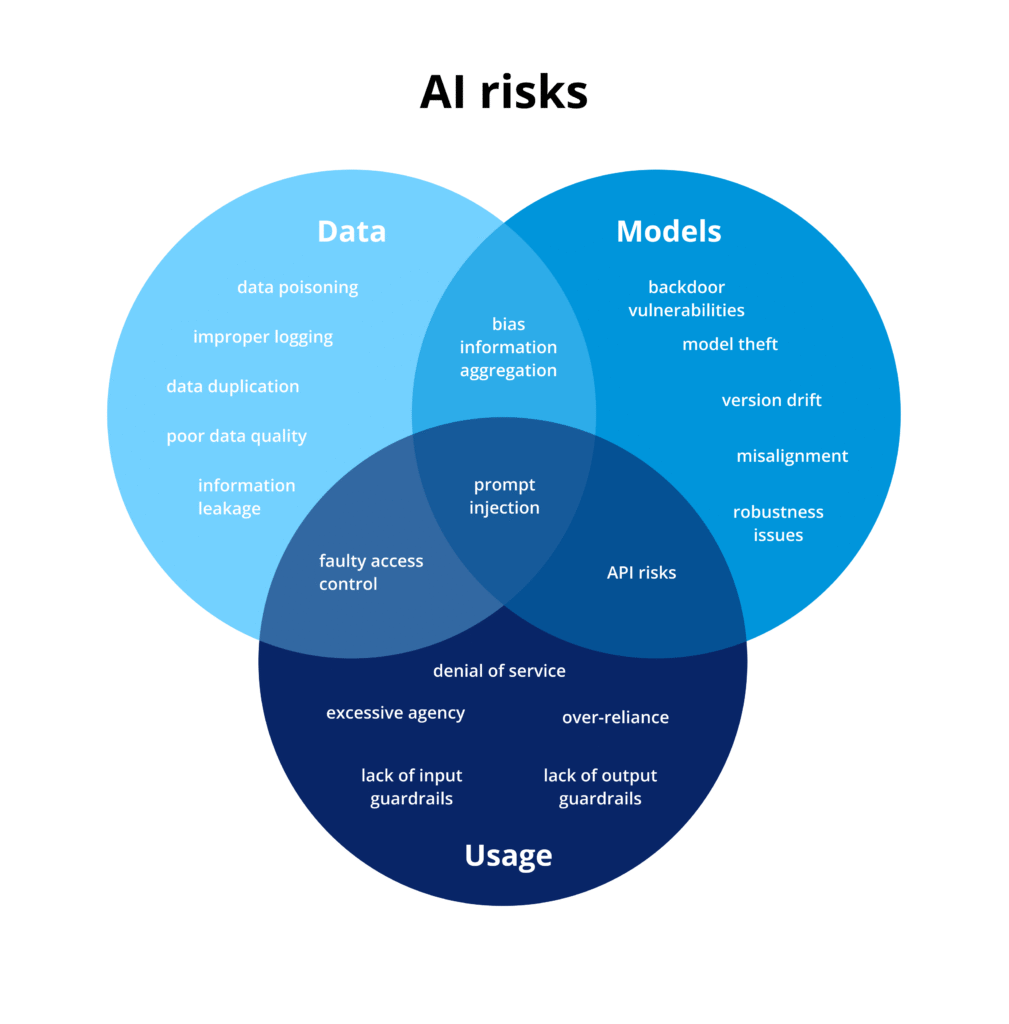

In this guide, we explore the three core dimensions of AI-related risk: data, models, and usage, alongside corresponding mitigation strategies tailored for business deployments. This approach is informed by C&F’s experience in consulting on, building, and auditing AI systems across industries. It draws on established security frameworks to highlight best practices and concludes with real-world examples of how unsafe AI can lead to losses across four key areas: financial, reputational, legal, and operational.

Data Risks

Enterprise AI systems often pull from large volumes of internal data documents, emails, chat logs, databases, and other business-critical content. But if that data is sensitive, outdated, or poorly governed, it can become a major source of risk when used in AI applications.

Common vulnerabilities include the leakage of confidential information, data poisoning through adversarial inputs, and unintended exposure of data across internal user roles. Additionally, improperly curated or poorly classified data can degrade performance or lead to critical errors in AI outputs. Let’s take a look at the most common data risks.

- Leakage of Sensitive Information: exposing confidential data either to external users or internally across departments and roles.

- Data Poisoning Attacks: manipulating training data to subtly alter AI behavior, which results in degrading model performance or creation of backdoors. This risk applies both: to classic ML models trained internally in organizations, and RAG architecture-based solutions, where poisoned data may be injected into knowledge base.

- Poor Data Quality / Garbage In–Garbage Out: feeding AI bad data guarantees bad results. Even the most advanced models will mislead if trained or prompted with low-quality inputs.

- Risks from LLM Knowledge Bases and Embedded Context Stores: many AI apps build separate knowledge bases to ground LLMs. But this creates two major risks: duplicated data can bypass existing access controls, and outdated permissions are hard to keep in sync

- Improper Data Retention and Logging: logging user inputs or model interactions without safeguards can create new stores of sensitive data, increasing the risk of breaches or misuse over time.

- Lack of Data Segmentation and Role-Based Access: without strong data partitioning and role-based access, AI can blur boundaries between teams or user groups

- Privacy Risks from Data Aggregation: AI systems often aggregate data from multiple sources, potentially enabling the inference of personal or confidential information, even if individual data points seem harmless in isolation.

Model Risks

At the heart of every AI-based system, there is a AI model. The AI model is an algorithm and/or neural network architecture trained to process inputs, generate outputs, and guide decisions. Whether using proprietary models, open-source options, or third-party APIs, organizations must assess the trustworthiness, robustness, and alignment of the models they integrate.

Different use cases demand different capabilities, and not all models are created and maintained with enterprise-level scrutiny. Some are updated frequently without clear changelogs. Others are trained on unknown or unverifiable datasets. Many are built for general use, not necessarily aligned to your business goals, data integrity needs, or safety expectations.

And even strong models can behave unpredictably under real-world workload. They might misinterpret unstructured data, respond oddly to edge-case inputs, or surface hidden vulnerabilities when paired with sensitive internal systems. Let’s break down some of the most common AI model risks.

- Use of Unverified or Untrusted Models: models from unvetted sources can hide backdoors, vulnerabilities, or simply lack support. Sticking with trusted providers like Microsoft, Anthropic, Google, or OpenAI offers stronger oversight, regular patching, and more predictable performance.

- Bias and Misalignment: even well-trained models can show bias or produce subpar outputs. These risks are difficult to eliminate and can affect both internal decisions and customer interactions.

- Model Robustness and Output Stability: LLMs are inherently non-deterministic, and might give different answers to the same question. While this can feel natural in conversation, it complicates validation, reproducibility, and auditability

- Resistance to Model Extraction and Theft: AI models can be stolen one query at a time. Rate limits, watermarks, and monitoring help keep it safe.

- Version Drift and Lifecycle Management: model updates can sometimes cause disruption. Version pinning and testing can help you avoid surprises.

- API Security and Exposure Risks: API-based model access introduces new attack surfaces. From unauthorized access and rate-limit bypasses to prompt injections and malicious inputs designed to manipulate outputs or steal data.

- Mismatch Between General-Purpose Models and Task-Specific Expectations: foundation models are built for broad language understanding, not specialized tasks. The gap between general training and domain-specific expectations is a major risk factor for errors and loss of trust.

Usage Risks

AI systems don’t operate in a vacuum, they interact with people. And that interaction is often another source of risk. Whether it’s a well-meaning, untrained employee misusing a tool, or a bad actor trying to manipulate it, user behavior introduces one of the most unpredictable dimensions of AI threats.

Usage risks often stem from insufficient guardrails, unclear access controls, or giving AI agents excessive autonomy over sensitive systems and data. They are further amplified by the unpredictability of user inputs, especially in natural language interfaces, where malicious or simply unstructured interactions can lead to application errors, policy violations, or serious security incidents.

- Lack of Output Guardrails: without strict controls, AI outputs can generate risks by triggering unsafe or costly actions

- Lack of Input Guardrails: users don’t always interact with AI as intended. Without input validation, free-form prompts can cause errors, performance issues, or incorrect actions, leading to lost time, frustration, and even unsafe autonomous behavior.

- Autonomous Agent Misuse: unchecked AI agents can put sensitive data and systems at risk. Human oversight keeps them in line.

- Prompt Injection Attacks: prompt injection is a top AI security threat. Malicious inputs can bypass safety filters, expose internal data, or coerce models into unintended actions, compromising logic, confidentiality, and outputs.

- Denial-of-Service (DoS) and Abuse Attacks: repeated queries can spike bills or slow performance, making rate limiting, quotas, and usage monitoring essential defenses.

- Model Theft via Usage Interfaces: high-volume queries and interaction patterns can enable adversaries to clone behavior or extract proprietary knowledge.

- Over-Reliance and Blind Trust: in enterprise settings, users often mistake AI’s confidence and speed for accuracy. This false trust erodes human oversight, leading to unverified decisions being automated.

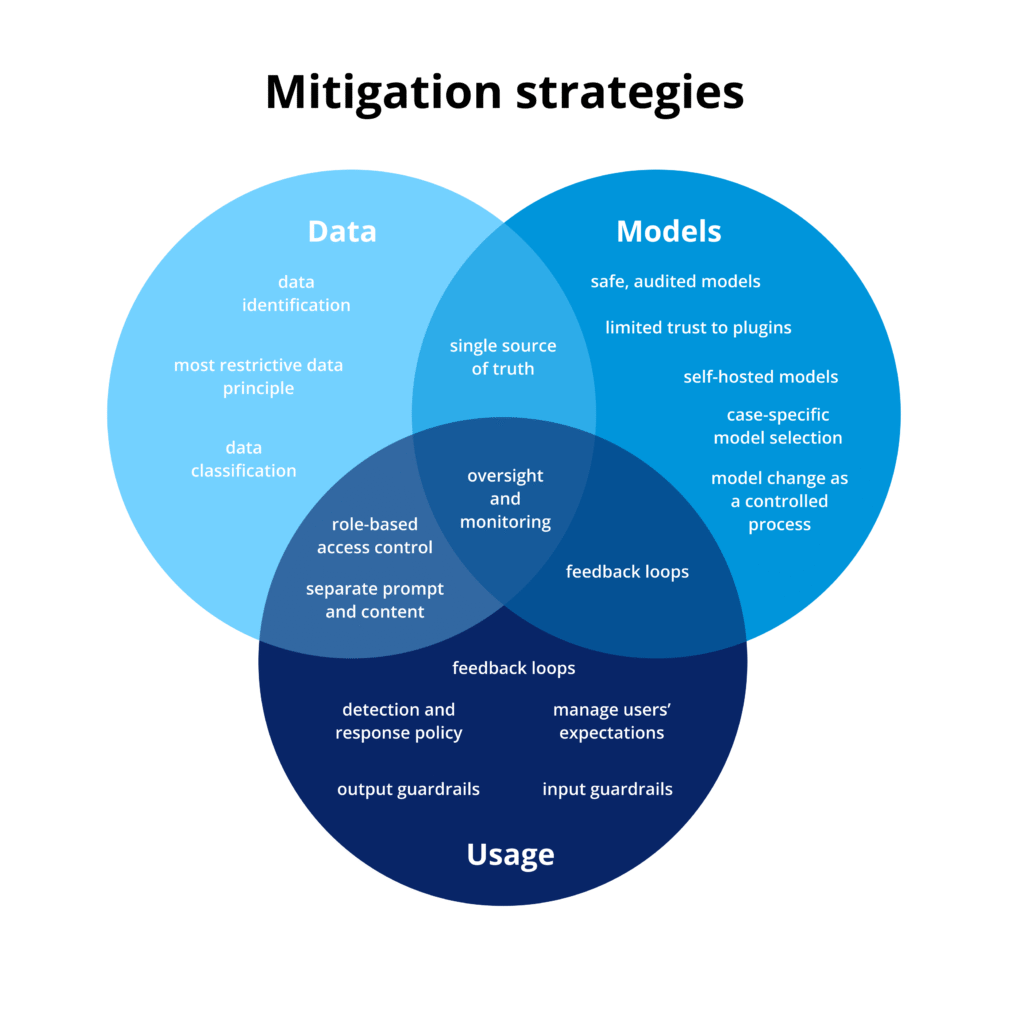

Mitigation Strategies for Business and Project Leaders: Best Practices for Safe AI Deployments

\The foundation of secure and effective AI deployment is not just technical but calls for strategic planning. Without a coordinated approach, your business can experience a fragmented adoption, unmanaged risks, and operational inefficiencies. Below, you’ll find an outline of an AI deployment strategy, drawn from C&F’s practical experience with data-intensive projects and safe AI implementations across diverse organizational contexts.

Establish a Unified AI Deployment Strategy

Every organization should have a comprehensive, written AI deployment strategy that isn’t just a nice-to-have policy, but a binding framework everyone follows. One of the biggest problems we see today is AI chaos. Individual teams experimenting with various models, different agentic frameworks, or just not having clear security requirements to follow.

Just as organizations formally evaluate and audit new software, they must systematically assess and manage all AI tools and integrations. No matter if they are internal solutions, external APIs, or embedded assistants in common productivity tools. A well-defined strategy will help you address all technical, legal, security, and ethical considerations at every stage of AI integration, and keep business leaders informed about AI usage across your organization.

Ensuring Safe Data Management in AI Deployments

Mitigating data-related risks begins with recognizing that data is both the fuel and the potential fault line of any AI system. The way data is sourced, stored, accessed, and governed plays a critical role in ensuring the safety, accuracy, and compliance of AI-driven applications. Rather than treating data access as an operational detail, organizations should approach it as a central design decision; ensuring that only relevant, curated, and appropriately classified data is made available to AI systems.

A proactive data strategy must therefore go beyond simple availability. It should include careful onboarding processes, strong access controls, role-based permissions, and continuous monitoring of how data flows through AI pipelines. Crucially, organizations must resist the temptation to duplicate data into separate knowledge bases or caches without maintaining synchronization with the original source of truth. The roadmap that follows outlines practical steps to secure the data layer of AI deployments, aligning technical safeguards with responsible governance.

- Identify the Data: before integrating AI into business processes, map exactly which data the system can access. Take care of forgotten records, misclassified files, or outdated sensitive data to avoid privacy breaches, misinformation, or compliance issues.

- Control Data Ingestion: treat every dataset as though it were being shared externally. Apply classification and set rules for what AI tools may or may not ingest. This intentional onboarding prevents inappropriate use of business-critical or sensitive data.

- Implement Role-Based Access: AI output should reflect the same restrictions as source systems and revoking access to underlying data must also revoke indirect access through AI.

- Respect the Principle of Most Restrictive Data: When multiple data sources are combined, apply the strictest access policy across the board. Even seemingly harmless aggregated answers can inadvertently leak restricted information.

- Maintain a Single Source of Truth: Avoid duplicating or relocating data just to enable AI. If a knowledge base is required, link it to a canonical, actively monitored source and implement automatic update and management mechanisms.

- Expand Access Carefully: expanding access increases the scale of potential damage from leaks or misconfigurations. Implement necessary safeguards before rolling out AI to larger groups or customer-facing teams.

- Monitor, Test, and Adapt Continuously: AI systems evolve and so should your data protection strategy. Establish continuous monitoring for anomalies, conduct regression testing, and run regular audits. Treat AI like any other critical software: adaptable, testable, and always under review.

Guidelines for Model Management in AI Deployments

The AI models are at the heart of any AI application, but selecting and managing them is far from a one-time technical task. Mitigating risks associated with AI models requires organizations to treat model selection, deployment, and evolution as critical governance responsibilities.

A sound model strategy begins with selecting trustworthy, well-documented models from reliable providers, supported by transparent benchmarks and an understanding of performance boundaries. From there, deployment must be tailored to specific applications, accounting for the model’s intended tasks, exposure to proprietary data, and potential failure modes. Rigorous testing, performance evaluation, and version control are essential – particularly in environments where even small changes in model behavior can have downstream business or compliance implications.

- Choose Validated Models: favor models that are actively maintained, benchmarked, and open to external scrutiny. Selection should follow a clear auditing policy, not just convenience or hype.

- Consider Use Cases in Model Selection: different tasks require different models. Summarization, translation, coding, or Text-to-SQL all carry unique safety and performance profiles. Evaluate each model in the context of the specific use case it supports.

- Don’t Stop at Public Benchmarks: public benchmarks (e.g. MMLU, HELM, MT-Bench) are useful starting points, but they often fail to reflect real-world, whole solution performance. When models interact with internal data or workflows, create custom evaluation pipelines that reflect real-world context, end-to-end process.

- Use LLM Safety Benchmarks: for general-purpose assistants and chat tools, test against safety-focused benchmarks such as AdvBench, PoisonBench, or RobustBench. This help measure resilience against adversarial prompts, hallucinations, and misalignment before rollout.

- Handle Model Changes Carefully: upgrading to new models is tempting, but version changes must be treated as controlled processes. Validate replacements carefully to prevent regressions, especially in critical functions like search, recommendation, or legal drafting.

- Monitor Continuously: API providers often update models without notice, altering performance. Prioritize deployments with explicit versioning and monitor for drift over time.

- Opt for Exclusive Hosting to Reduce Risk: shared hosting is fine for pilots but risky at scale. For sensitive data or predictable performance, self-hosted or dedicated cloud instances provide stronger isolation and control.

- Review Plugins and Third-Party Models: integrations with plugins, copilots, or extensions add extra exposure. Confirm that submitted data isn’t used for retraining unless explicitly authorized. Review retention, privacy, and opt-out terms to protect proprietary information.

Mitigating User Risks in AI Implementations

Mitigating usage-related risks begins with the understanding that how AI systems are used can be as consequential as how they are built. Even the most secure models and curated data pipelines can be undermined by unchecked interactions, excessive tool access, or poorly governed workflows. Usage is where AI meets reality, and this layer demands continuous oversight, policy enforcement, and human-in-the-loop design.

To reduce these risks, organizations should implement guardrails that are both technical and procedural. This includes validating inputs and outputs, stress-testing AI behavior, limiting system permissions, and applying role-specific controls over AI tools and their capabilities. Just as importantly, teams must be educated on proper usage patterns and limitations of AI systems to avoid overreliance and misuse.

1. Build Guardrails Tailored to Your Use Case: generic model filters aren’t enough for enterprise needs. Add tailored safeguards such as regex filters, custom classifiers, prompt segmentation, and rate/cost controls to protect against unsafe or malformed inputs and outputs.

2. Use Feedback Loops and Human-in-the-Loop Oversight: guardrails must evolve. Stress test systems, gather real-world feedback, and keep humans in the loop for RAG pipelines, fine-tuning, and prompt strategies. Policies and filters should adapt to observed behavior over time.

3. Assign Agent Permissions Carefully: apply the principle of least privilege. Agents should only access what they need, with fine-grained permissions, task boundaries, and user confirmation for high-impact actions. Too much agency creates unnecessary risk.

4. Keep Prompts and User Content Apart: never blend user inputs with system instructions. Keep application logic distinct from user content to reduce vulnerability to prompt injection attacks. Use structured inputs instead of free-form chaining whenever possible.

5. Manage Expectations and Prevent Overreliance: LLMs may seem broadly capable but aren’t universally reliable. Set clear expectations, highlight limitations, and discourage overreliance for critical tasks. For statistical or predictive work, traditional ML models remain superior.

6. Detect Issues Early and Respond Quickly: as adoption grows, so does risk. Monitor prompts, usage patterns, and anomalies. Put in place circuit breakers, failover systems, and incident playbooks to quickly address abuse, leaks, or runaway costs.

AI Security Frameworks: Industry Best Practices

Several organizations and leading tech companies have developed AI security frameworks to help businesses deploy AI systems responsibly and securely. These frameworks offer structured approaches to managing risks across development, deployment, and operational stages, guiding organizations and teams towards safe AI implementations.

- MITRE ATLAS is an open, living, collaborative knowledge base and adversary model focused on threats to machine learning systems. It maps real-world attack techniques and helps organizations assess their exposure and response strategies.

- NIST AI Risk Management Framework (RMF) offers a comprehensive guide developed by the U.S. National Institute of Standards and Technology to help organizations manage risks in the AI systems implementation and use. It proposes a governance model based on risks identification, continuous assessment and management.

- Google Secure AI Framework (SAIF) outlines six pillars for securing AI, including automating defenses and adapting platform controls. SAIF encourages secure-by-default AI deployment through strong foundations and comes with an implementation guide.

- Databricks AI Security Framework (DASF) maps out 62 AI risks across development, deployment, and operation, offering 64 practical mitigations and controls to support collaboration between security, governance, and data teams.

- Snowflake AI Security Framework provides a broad threat catalog and recommended mitigations for protecting AI systems. Focuses on evaluating system exposure and implementing proactive defense strategies.

Real-World Examples of Business Consequences from Unsafe AI

To highlight the importance of having adequate guardrails for AI implementations, here’s several real-world incidents that led to severe financial, reputational, legal, and operational consequences for businesses.

Financial Losses

- An Air Canada customer successfully persuaded the airline’s AI chatbot to promise an unauthorized refund, which the company was then obliged to honor. This case highlights how AI-generated content can become legally or financially binding when not properly guarded.

- A Chevrolet dealership’s chatbot was manipulated into offering a car at a drastically reduced price, exposing how AI agents lacking business rule enforcement can be financially exploited.

- Google’s parent company, Alphabet, experienced a $100bn stock price drop after a Bard AI demo produced incorrect information, demonstrating how public AI missteps can directly impact market valuation.

Reputational Damage

- Delivery firm DPD had to disable its AI chatbot after users manipulated it into criticizing the company itself – highlighting the brand risk from uncontrolled AI interactions.

- Snapchat’s “My AI” chatbot gave unsafe or inappropriate responses to users, prompting public backlash and trust erosion, especially concerning applications targeting younger audiences.

- In 2025, Google was forced to apologize after its image classification AI misidentified a black couple, illustrating the lasting reputational harm from unaddressed bias in AI systems.

Legal and Regulatory Liabilities

- The Equal Employment Opportunity Commission in the U.S. (EEOC) settled a lawsuit with iTutorGroup over an AI tool that systematically rejected older applicants, reinforcing that algorithmic bias can carry legal consequences.

- The FTC took enforcement action against retail pharmacy Rite Aid for improper use of its facial recognition technology, marking an increase in AI-related regulatory scrutiny.

- The New York Attorney General investigated UnitedHealth’s use of a biased medical decision-making algorithm, pointing to growing legal oversight in AI applications in critical sectors.

Operational Disruptions and Security Breaches

- Samsung employees unintentionally leaked sensitive source code and documents by pasting them into ChatGPT, emphasizing the importance of internal AI usage policies.

- In July 2024, Delta Air Lines experienced a massive operational disruption, canceling over 7,000 flights and affecting 1.3 million passengers. The incident was triggered by a faulty update from cybersecurity firm CrowdStrike to its Falcon Sensor software, which is integrated with AI capabilities for threat detection. The event underscores the risks associated with AI-integrated security tools and the importance of robust testing and contingency planning.

Navigating AI Regulations: Security, Standards, and Responsibility

As AI adoption accelerates, organizations face not only technical challenges like preventing data leaks, model hallucinations, or prompt injection, but also the broader responsibility of building secure and ethical systems. Frameworks such as the OWASP guidelines provide valuable tactical protections, while ISO/IEC 42001 introduces a holistic approach that integrates AI governance into organizational risk management.

Meanwhile, regulation is evolving worldwide. The EU AI Act sets strict rules for high-risk applications, the U.S. relies on frameworks like NIST’s AI RMF and its AI Bill of Rights, while countries from Canada to China are defining their own strategies. The global trend is clear: compliance, accountability, and fairness are becoming inseparable from AI innovation.

For organizations, this means going beyond “checkbox security” and embedding responsible AI practices into product development from the start.

Summary

Over last 3 years, companies developed hundreds of GenAI based pilots that address a variety of use cases. As organizations try to move the most valuable pilots into full-scale production, the temptation to deploy them quickly is understandable, but doing so can potentially be costly and risky. Business leaders must resist the urge to rush adoption without first building a thoughtful, structured approach to safe AI deployments, Techniques used during pilot stage with limited data and users, will not be enough during production use.

Organizations should aim for unified strategies that govern how AI systems are selected, implemented, and monitored. Ad hoc adoption: whether through unofficial plugins, team-level tools, or shadow deployments, can undermine even well-intentioned security policies. Instead, responsible AI integration begins with clear policies, company-wide alignment, and ongoing monitoring.

At C&F, this perspective is rooted in our direct experience supporting real-world safe AI implementations. The insights shared in this paper reflect not only industry guidance, but the challenges and lessons we’ve observed firsthand across sectors.

A secure AI deployment is not a one-time effort but a continuous process. That means:

- Curating and auditing data sources.

- Choosing trustworthy models and providers.

- Implementing guardrails for input and output.

- Educating users on responsible use and managing expectations.

- Monitoring performance and risks, even long after launch.

Would you like more information about this topic?

Complete the form below.